I recently came across a preprint titled “AI Tutoring Outperforms Active Learning” by Gregory Kestin, Kelly Miller, Anna Klales, Timothy Milbourne and Gregorio Ponti from Harvard University. It was actually released months ago, but I only learned of it through a recent post by Dr Philippa Hardman.

The key result is that students using an AI tutor achieved more than double the learning gains of students in active-learning sessions, and did so in less time. They also reported feeling more engaged and motivated. This, of course, needs to be unpacked.

Experimental design

The study took place at Harvard University in Physical Sciences 2 (PS2), an introductory physics class, and included 194 students. Notably, around 70% of them were female, and most were in their second undergraduate year.

The researchers ran a randomised controlled study in which one group learned at home with an AI tutor called "PS2 Pal", while the other attended an in-class active learning session, working together in self-selected groups. Importantly, PS2 Pal was carefully designed to incorporate pedagogical principles such as facilitating active learning, managing cognitive load, and promoting a growth mindset.[1]

Crucially, this was not just a matter of prompt engineering. To prevent hallucination, the researchers enriched the “prompts with comprehensive, step-by-step answers”.

One notable aspect was the crossover design, which meant that every student got to use the AI tutor. A traditional parallel-group randomised controlled trial would leave one group without the intervention, which could raise equity concerns. The experiment ran over two consecutive weeks: one group used the AI tutor in the first week, when the topic was surface tension, and the other group used it in the second week, when the topic was fluids.
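To make the crossover structure concrete, here is a minimal Python sketch of how such a schedule could be assigned. It assumes a simple random split into two equal-sized groups; the function name `crossover_schedule`, the fixed seed, and the 50/50 split are illustrative assumptions, not necessarily the researchers' actual randomisation procedure.

```python
import random


def crossover_schedule(students, weeks=("surface tension", "fluids"), seed=0):
    """Hypothetical helper: randomly split students into two groups.

    Group A uses the AI tutor in week 1 and active learning in week 2;
    group B does the reverse, so every student gets the AI condition once.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    shuffled = list(students)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    group_a, group_b = shuffled[:half], shuffled[half:]

    schedule = {}
    for s in group_a:
        schedule[s] = {weeks[0]: "AI tutor", weeks[1]: "active learning"}
    for s in group_b:
        schedule[s] = {weeks[0]: "active learning", weeks[1]: "AI tutor"}
    return schedule


if __name__ == "__main__":
    plan = crossover_schedule([f"student{i}" for i in range(194)])
    ai_week1 = sum(v["surface tension"] == "AI tutor" for v in plan.values())
    print(ai_week1, len(plan) - ai_week1)
```

With 194 students, this sketch puts 97 students in each condition per week, and each student experiences both conditions exactly once. That balance is what lets a crossover design compare conditions within the same cohort without withholding the intervention from anyone.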

The results

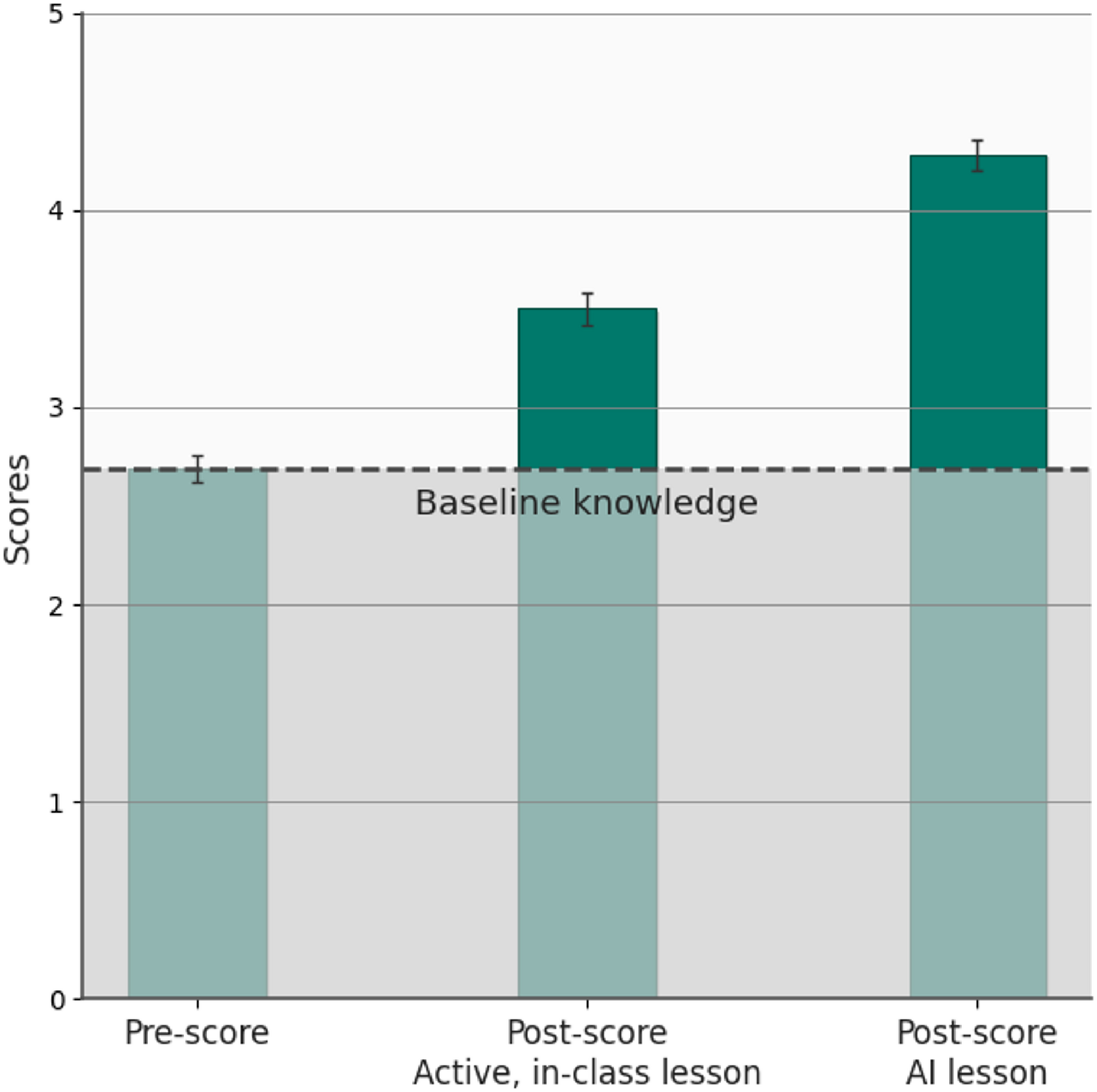

The main result is summarised in the chart above, which shows the mean score (out of 6) before and after the lesson. On average, students who used the AI tutor achieved double the learning gains of the control group. Looking at medians, the AI group scored 4.5 after the lesson compared with 3.5 for the control group, from a starting point of 2.75, so the median learning gain is actually more than double.
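Working through the median figures quoted above, and assuming both groups share the stated median starting point of 2.75, the gains compare as follows:

$$
\begin{aligned}
\text{Gain}_{\text{AI}} &= 4.5 - 2.75 = 1.75 \\
\text{Gain}_{\text{control}} &= 3.5 - 2.75 = 0.75 \\
\frac{\text{Gain}_{\text{AI}}}{\text{Gain}_{\text{control}}} &= \frac{1.75}{0.75} \approx 2.33
\end{aligned}
$$

So the median gain for the AI group is roughly 2.3 times that of the control group.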

The researchers also ran a linear regression showing that the result remains statistically significant after controlling for variables such as prior physics understanding and prior AI experience. Interestingly, students with more prior ChatGPT experience showed lower learning gains.
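For readers less familiar with this kind of analysis, a regression of this type generally takes a form like the one below. This is a generic sketch, not the paper's exact specification: the variable names are illustrative, and the actual outcome measure and covariates are those reported in the paper.

$$
\text{post}_i = \beta_0 + \beta_1\,\text{AI}_i + \beta_2\,\text{pre}_i + \beta_3\,\text{priorAI}_i + \varepsilon_i
$$

Here $\text{AI}_i = 1$ if student $i$ used the AI tutor and $0$ otherwise, $\text{pre}_i$ stands in for prior physics understanding, and $\text{priorAI}_i$ for prior AI experience. "Controlling for" these variables means $\beta_1$ estimates the effect of the AI tutor with the other terms held fixed, and a significant result means the estimate of $\beta_1$ is statistically distinguishable from zero.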

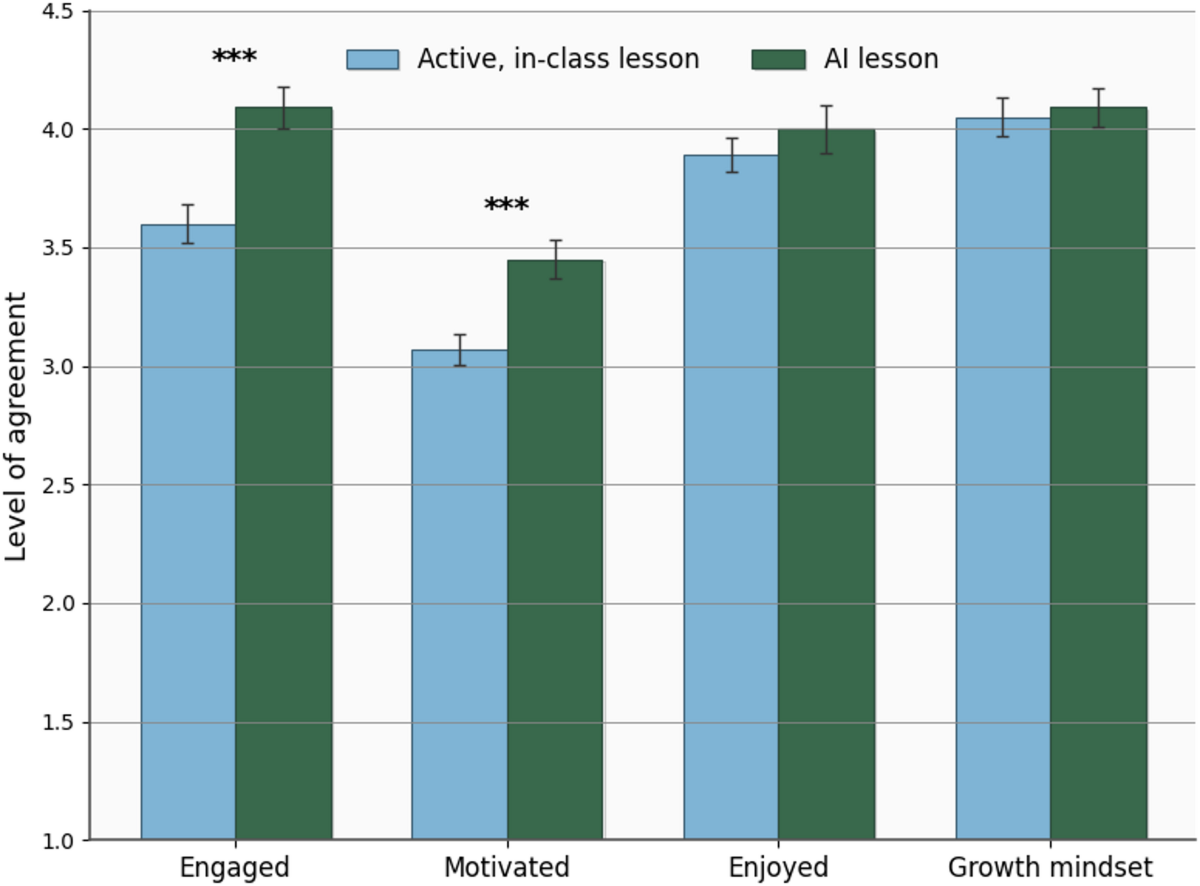

Students in the AI group reported significantly higher engagement and motivation. They were asked how much they agreed with the following statements on a 5-point Likert scale, from 1 (“strongly disagree”) to 5 (“strongly agree”):

- Engagement - “I felt engaged [while interacting with the AI] / [while in lecture today].”

- Motivation - “I felt motivated when working on a difficult question.”

- Enjoyment - “I enjoyed the class session today.”

- Growth mindset - “I feel confident that, with enough effort, I could learn difficult physics concepts.”

The chart below shows the difference in average score for each of the statements.

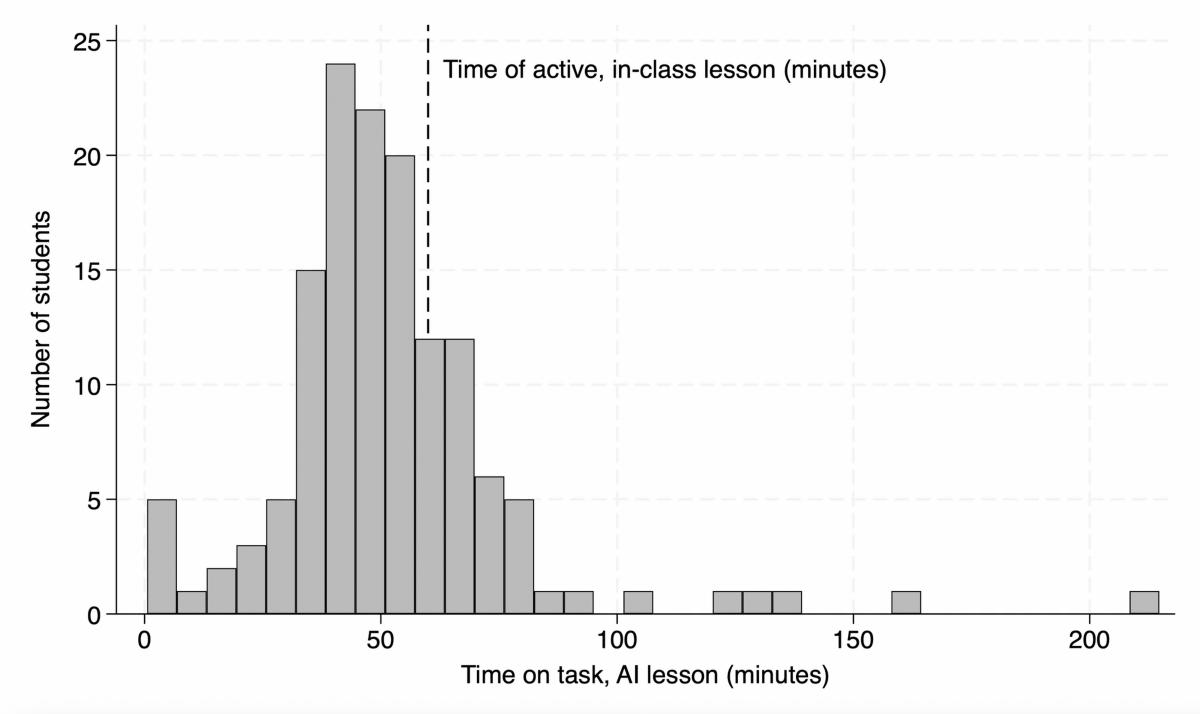

Students in the AI group completed their learning tasks in less time than the non-AI group: the median time on task was 49 minutes for the AI group, compared to 60 minutes for the non-AI group. Personally, I’m slightly sceptical of this result because I don’t think these two numbers are comparable. The study takes the full 60-minute class as the in-class learning time, but distractions, peer interactions and instructor pacing all affect how much of that time is actually spent learning. The study also does not provide detail about how students used their time on the AI platform. The raw data show that some students spent as little as 32 seconds on the platform, while others spent as much as 211 minutes, more than three times the lesson time!

Commentary on the results

I've now written about two RCTs looking at the effectiveness of LLM-based AI tutors. My overview of the other one by researchers at the University of Pennsylvania can be found here.

There is growing evidence that AI tutors can have a positive impact on learning and engagement if deployed in the right way. The evidence suggests that AI tutors need to be aligned with pedagogical best practices to be effective. These results match what we are seeing in our deployments at Bloom AI, and it’s encouraging to have academic validation of the direction we are heading.

Some questions still remain:

- What access to AI did students have during the post-class quiz? Since the AI group had their lesson online, they may have used PS2 Pal or other AI tools for assistance.

- How generalisable are the results? Harvard is one of the top universities in the world, and the study focused on a single subject. Do the results hold for lower-achieving students, other subjects, or other year levels?

- Does the effectiveness of AI in education change for different students? As with the other RCT, I’d love to see the results cut by demographic, behavioural or other background data.

- How do these results reconcile with the other RCT on AI tutors? The other RCT did not find any significant learning gain from AI tutoring, only improvements in assisted problem solving and perceived learning. It’s important to note that the studies had very different conditions, all of which could have contributed: type of student (secondary vs. tertiary), cultural background (Turkish students vs. American students), nature of the control (individual work vs. group work), subject (mathematics vs. physics).

- What is the impact of AI tutors on longer-term learning? The current studies assess students immediately after learning, but the true measure is whether students can retain, apply, and adapt this knowledge over time.